Quo vadis, drive-test?

Et tu, RF scanner?

There is no doubt that both the practice of drive-testing, as the mainstay of network optimization, and the market for drive-test tools have shrunk dramatically over the last decade or so. The wave of company mergers that eliminated many brands culminated in 2009 with Agilent's sale of its drive-test business. The remaining vendors keep a low profile, with few new tool announcements. But what is the reason for this decline? Can "SON" (the self-organizing network) be singled out as the main or only factor?

SONs offer three distinct innovations:

- Automation of the operator's business processes, including network deployment, maintenance, and optimization; at present, mainly by transforming previously disjoint databases and servers into a networked business data processing system.

- Adoption of new air protocols, especially 4G, that are much more adaptable to varying environments and loads, i.e., they are intrinsically able to self-optimize and thus diminish the need for an external optimization procedure.

- More reliance on mobile measurements and less on drive-test data. This trend started before SON made its first inroads, but it is being helped by more real-time connectivity in SON/OSS.

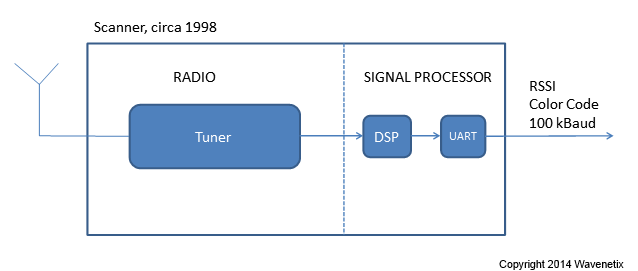

Whatever effect the development of SON has had on the drive-test market, it does not explain everything that occurred in the RF scanner market. Indeed, there have been internal reasons for its apparent decline besides the direct assault by SONs. From the start, scanners did all signal processing internally, outputting measurement results in real time. The internal reasons are these:

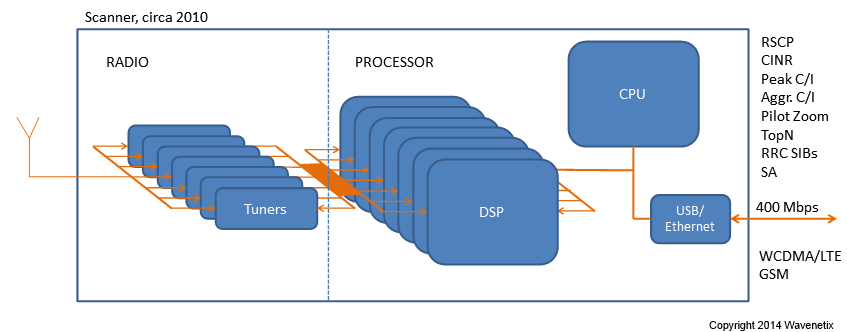

- Scanner architecture evolved along a trajectory that could not be sustained. Originally, DSP processors were used because of their clear advantage over general-purpose CPUs: fast multiply-accumulate units (MACs) in their ALUs. A more recent advantage is the inclusion of algorithm-specific accelerators on the chip, such as Viterbi or Turbo co-processors. As for the MACs, general-purpose processors (x86/x64 is one example) nowadays offer comparable vector multiply and add operations through their SIMD extensions (various versions of SSE), originally introduced for multimedia and graphics workloads. The hardware DSP accelerators, in turn, are specific to standard air-protocol processing and are not practical for more advanced signal detection and measurement algorithms. Except in mass deployments of standards-based processors (mainly in NodeBs and eNodeBs), DSP processors have lost the war to superior, massively multicore general-purpose computing platforms.

- Processing requirements have been rising exponentially since the simple days of single-RF-path, single-measurement-at-a-time scanners. As a result, scanners have started to look like small supercomputers, endowed with multiple DSP chips and cores and PowerPC-class control chips, running semi-custom multitasking OSs capable of maintaining smooth operation of hundreds of concurrent measurement tasks on multiple carrier frequencies. Such scanners are costly to design, maintain, and manufacture.

- Powerful as they are, modern scanners lack the ability to fully process signals in real time. Instead they rely on a combination of so-called "signal acquisition" and "signal tracking" modes. Signal acquisition involves a fuller version of processing that achieves detection over the full dynamic range of the scanner. Since it takes a relatively long time, acquisition is invoked only every so often, while signal tracking is used to measure the acquired signals between acquisition cycles. However, signal tracking will miss any new occurrences of other, unacquired signals. These new signals have to wait until the next acquisition to be discovered, and then there is no way to go back and track them over the preceding period, since raw signals are not stored in memory and are discarded as soon as they are "tracked."

- Even worse, modern communications signals have small spreading factors (TD-SCDMA, HSPA) and/or short fixed "pilots" (preambles, midambles, SYNC-DL, etc.). With conventional correlative reception, this dramatically reduces the dynamic range of detectable signals in the presence of interference and noise. Whereas the dynamic range for technologies such as CDMA/WCDMA exceeds 30 dB, the same parameter for 4G technologies, processed with similar algorithms, is only slightly higher than 10 dB. Overcoming this limitation calls for more sophisticated methods of reception, such as multiuser joint detection, but these are much more complex and take longer, often requiring several iterations. The computing power required for such real-time processing exceeds what can be found inside these small boxes.
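
The link between pilot length and dynamic range in the last point can be illustrated numerically. For an ideal correlator matched to a known sequence of N chips in white noise, the output SNR improves by about 10·log10(N) dB. The sketch below is a simplified illustration, not a model of any specific air interface: the lengths 16 and 1024, the random ±1 pilot, the input SNR, and the Gaussian noise model are all assumptions chosen for the example.

```python
import numpy as np


def processing_gain_db(n_chips: int) -> float:
    """Ideal correlation gain, in dB, for a known sequence of n_chips chips."""
    return float(10.0 * np.log10(n_chips))


def correlator_output_snr_db(n_chips: int, input_snr_db: float,
                             trials: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of the SNR at the output of a matched correlator.

    Assumptions (illustrative only): a random +/-1 pilot, real-valued
    unit-variance Gaussian noise, perfect timing, no other interferers.
    """
    rng = np.random.default_rng(seed)
    amp = 10.0 ** (input_snr_db / 20.0)             # pilot amplitude; noise power is 1
    pilot = rng.choice([-1.0, 1.0], size=n_chips)   # hypothetical pilot sequence
    noise = rng.standard_normal((trials, n_chips))
    rx = amp * pilot + noise                        # one received block per trial
    out = rx @ pilot / n_chips                      # normalized correlator output
    signal_power = np.mean(out) ** 2
    noise_power = np.var(out)
    return float(10.0 * np.log10(signal_power / noise_power))


if __name__ == "__main__":
    for n in (16, 1024):
        print(f"N={n:5d}: ideal gain {processing_gain_db(n):5.1f} dB, "
              f"simulated output SNR at -5 dB input "
              f"{correlator_output_snr_db(n, -5.0):5.1f} dB")
```

Under these assumptions a 16-chip pilot yields roughly 12 dB of gain and a 1024-chip sequence roughly 30 dB, which is the same order of difference as the figures quoted above. A real receiver would also have to contend with multipath, frequency offset, and structured interference.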

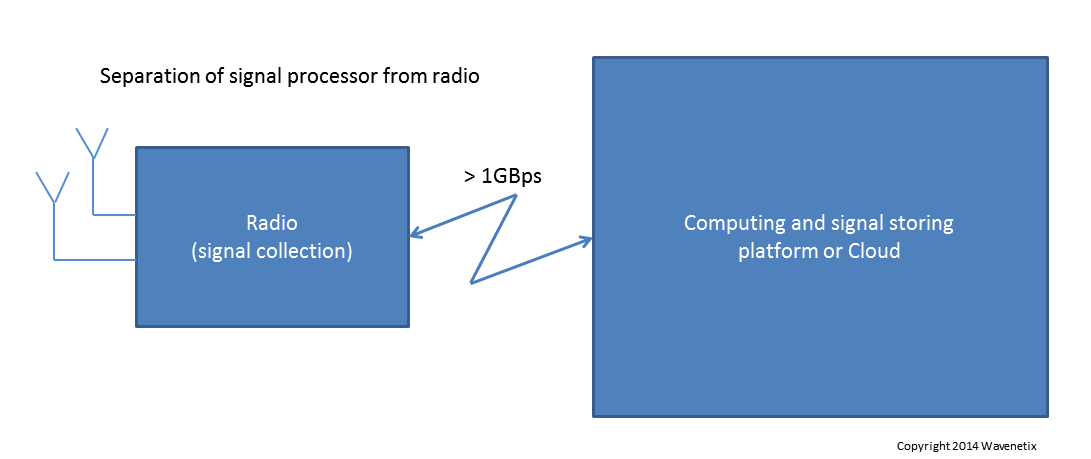
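
The acquisition/tracking split described in the list above can be sketched as a toy timeline. This is only an illustration under stated assumptions: time is divided into abstract steps, acquisition runs every `acquisition_period` steps and finds every signal present at that moment, and the names `Signal`, `simulate`, and `missed_steps` are hypothetical, not taken from any scanner firmware.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Signal:
    name: str
    appears_at: int  # first time step at which the signal is receivable


def simulate(signals: List[Signal], n_steps: int,
             acquisition_period: int) -> Dict[str, List[int]]:
    """Return, per signal, the time steps at which it was actually measured."""
    tracked = set()
    measured: Dict[str, List[int]] = {s.name: [] for s in signals}
    for t in range(n_steps):
        present = {s.name for s in signals if s.appears_at <= t}
        if t % acquisition_period == 0:
            # Slow, full-range acquisition: discovers everything present now.
            tracked |= present
        # Fast tracking: only already-acquired signals are measured.
        # Raw samples for this step are then discarded (nothing is stored).
        for name in tracked:
            measured[name].append(t)
    return measured


def missed_steps(signal: Signal, measured_steps: List[int],
                 n_steps: int) -> List[int]:
    """Steps at which the signal was present but not measured."""
    seen = set(measured_steps)
    return [t for t in range(signal.appears_at, n_steps) if t not in seen]


if __name__ == "__main__":
    sigs = [Signal("A", appears_at=0), Signal("B", appears_at=3)]
    result = simulate(sigs, n_steps=20, acquisition_period=10)
    for s in sigs:
        print(s.name, "missed at steps", missed_steps(s, result[s.name], 20))
```

In this toy run, signal B appears shortly after an acquisition cycle and goes unmeasured until the next one. Because no raw samples are kept, those steps cannot be recovered afterwards.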

- In addition, deeper data mining of the signal may require knowledge of signal parameters over a wide area in order to find weak signals (for example, knowing signal timing helps detect a signal with more confidence). This type of processing requires long-term storage of all signal samples, not only of the measured signal parameters. The storage capacity required would run to many gigabytes, and preferably terabytes, for reasonably long drives. Theoretically, disks of such capacity could be added to a scanner, but why design computers (and all the system software) when they already exist and are readily available, indeed commoditized?

- Another way of looking at the problem is to notice that the computing power of a scanner is not scalable. Although one could theoretically add more processing cards when needed (if the design were based on pluggable boards), this makes the design even more complicated and even more like reinventing the wheel. A better way is to use commoditized computing facilities for signal processing by separating the radio part of the scanner from the processing part, as shown in the accompanying picture. This processing facility may be a local host or, if a fast connection is available, a data processing cluster somewhere in the "cloud."
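
The "many gigabytes and preferably terabytes" estimate for raw-sample storage can be checked with simple arithmetic. The figures below are assumptions for illustration: a 30.72 Msps complex sample rate (a rate commonly used for a 20 MHz LTE carrier), 16-bit I plus 16-bit Q per sample, a single receive path, and no compression. The helper name `raw_storage_bytes` is hypothetical.

```python
def raw_storage_bytes(sample_rate_hz: int, bytes_per_sample: int,
                      duration_s: int, n_channels: int = 1) -> int:
    """Bytes needed to store uncompressed raw samples for the whole drive."""
    return sample_rate_hz * bytes_per_sample * duration_s * n_channels


if __name__ == "__main__":
    SAMPLE_RATE_HZ = 30_720_000   # assumed complex sample rate
    BYTES_PER_SAMPLE = 4          # assumed 16-bit I + 16-bit Q
    for hours in (1, 2, 4):
        total = raw_storage_bytes(SAMPLE_RATE_HZ, BYTES_PER_SAMPLE, hours * 3600)
        print(f"{hours} h drive, one carrier: {total / 1e9:.1f} GB")
```

With these assumed numbers, one hour on a single carrier already takes about 442 GB, and a few hours or a second carrier pushes the total past a terabyte.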

There is nothing that precludes connecting more than one radio to the processing "server." And those "radios" could actually be any source of signal or network data, including mobile measurements, OSS KPIs, small-cell measurements, or any useful data from a C-RAN. Thus the processing part of the former "drive-test tool" becomes an integral part of a future SON system. It should be able to accept drive-test data when available, but it is not centered around drive-testing.
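
One way to picture a processing "server" fed by several kinds of sources is a common record interface with a time-ordered merge. The sketch below is an assumption-laden illustration, not a description of any existing product: `Record`, `DataSource`, `ListSource`, and `ProcessingServer` are hypothetical names, and the sources are stand-ins that replay pre-recorded items.

```python
import heapq
from dataclasses import dataclass
from typing import Any, Iterator, List, Protocol, Tuple


@dataclass
class Record:
    timestamp: float
    source: str
    kind: str      # e.g. "iq_block", "mobile_report", "kpi" (illustrative labels)
    payload: Any


class DataSource(Protocol):
    """Anything that can deliver time-ordered records to the server."""
    name: str

    def records(self) -> Iterator[Record]: ...


class ListSource:
    """Stand-in for a radio front end, mobile feed, or OSS feed."""

    def __init__(self, name: str, kind: str, items: List[Tuple[float, Any]]):
        self.name = name
        self.kind = kind
        self._items = sorted(items, key=lambda item: item[0])

    def records(self) -> Iterator[Record]:
        for ts, payload in self._items:
            yield Record(ts, self.name, self.kind, payload)


class ProcessingServer:
    """Accepts any number of sources and exposes one time-ordered stream."""

    def __init__(self) -> None:
        self._sources: List[DataSource] = []

    def attach(self, source: DataSource) -> None:
        self._sources.append(source)

    def merged(self) -> Iterator[Record]:
        streams = (s.records() for s in self._sources)
        return heapq.merge(*streams, key=lambda r: r.timestamp)


if __name__ == "__main__":
    server = ProcessingServer()
    server.attach(ListSource("radio-1", "iq_block", [(0.0, b"..."), (2.0, b"...")]))
    server.attach(ListSource("mobiles", "mobile_report", [(1.0, {"level_dbm": -95})]))
    for rec in server.merged():
        print(rec.timestamp, rec.source, rec.kind)
```

The point of the design is that the server does not care whether a record came from a drive-test radio or from some other feed, so drive-test data becomes one optional input among several.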

However, even without drive-test data, the optimization system still needs accurate and reliable signal data. The situation is somewhat reminiscent of what happened to the camera industry not so long ago. When film camera companies found themselves threatened by emerging digital technology, some were able to adjust; others tried to defend their turf and lost. So what's the lesson? Film is indeed gone, but cameras still sport excellent objective lenses (think: radios) and ever more sophisticated signal processors (signal servers in our case), and the photography business is booming!